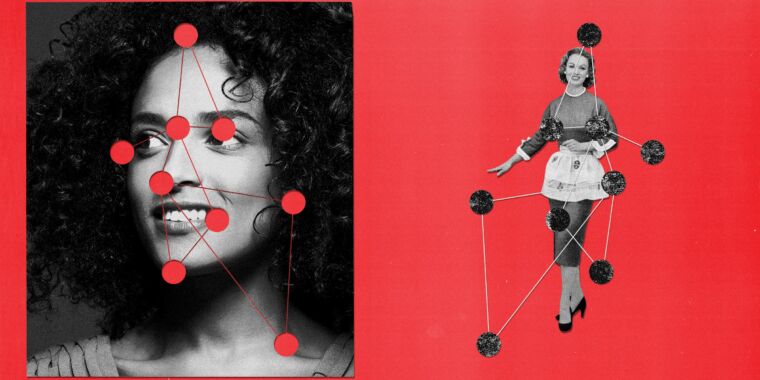

When AI sees a man, it thinks "official." A woman? "Smile"

Companies, agencies, institutions, etc

GESIS Leibniz Institute for the Social Sciences

New York University

American University

University College Dublin

University of Michigan

California YIMBY

Google

Amazon

Microsoft

Crowdworkers

IBM

AI

Border Patrol

World Press Photo

Princeton

Open Images

Condé Nast

People

Tom Simonite

Carsten Schwemmer

Steve Daines

Lucille Roybal-Allard

Ling Ling Chang

Tracy Frey

Olga Russakovsky

Cathleen Galgiani

Jim Beall

Groups

European

Black

Republican

Honduran

Physical locations

Google’s

Places

No matching tags

Locations

US

Köln

Germany

California

Texas

Russakovsky

Princeton

Events

No matching tags

Summary

Crowdworkers were paid to review the annotations those services applied to official photos of lawmakers and images those lawmakers tweeted.

Google's AI image recognition service tended to see men like senator Steve Daines as businesspeople, but tagged women lawmakers like Lucille Roybal-Allard with terms related to their appearance. The AI services generally saw things human reviewers could also see in the photos. A company that used a skewed AI service to organize a large photo collection might inadvertently end up obscuring women businesspeople, indexing them instead by their smiles.

When this image won World Press Photo of the Year in 2019, one judge remarked that it showed "violence that is psychological." Google's image algorithms detected "fun."

Prior research has found that prominent datasets of labeled photos used to train vision algorithms showed significant gender biases, for example showing women cooking and men shooting. “So we’d better make sure they’re doing the right things in the world and there are no unintended downstream consequences.”

An academic study and tests by WIRED found that Google's image recognition service often tags women lawmakers like California state senator Cathleen Galgiani with labels related to their appearance, but sees men lawmakers like her colleague Jim Beall as businesspeople and elders.

One approach to the problem is to work on improving the training data that can be the root cause of biased machine learning systems. Russakovsky is part of a Princeton project working on a tool called REVISE that can automatically flag some biases baked into a collection of images, including along geographic and gender lines. When the researchers applied the tool to the Open Images collection of 9 million photos maintained by Google, they found that men were more often tagged in outdoor scenes and sports fields than women.

As said here by Tom Simonite, wired.com